13.2. Detailed OASIS implementation

13.2.1. In CROCO

The following routines are specifically built for coupling with OASIS and contain calls to OASIS intrinsic functions:

- cpl_prism_init.F: Manages the initialization phase of OASIS3-MCT: local MPI communicator
- cpl_prism_define.F: Manages the definition phase of OASIS3-MCT: domain partition, names of exchanged fields as read in the namcouple
- cpl_prism_grid.F: Manages the definition of grids for the coupler
- cpl_prism_put.F: Manages the sending of arrays from CROCO to the OASIS3-MCT coupler
- cpl_prism_getvar.F: Manages the generic reception from OASIS3-MCT
- cpl_prism_get.F: Manages the specifics of each received variable: C-grid position and field unit transformations

These routines are called in the code from:

- main.F: Initialization and finalization phases
- get_initial.F: Definition phase
- zoom.F: Initialization phase for AGRIF nested simulations
- step.F: Exchanges (sending and reception) of coupling variables

Other CROCO routines have also been slightly modified to introduce coupling:

- testkeys.F: Enables automatic linking to the OASIS3-MCT libraries during compilation with jobcomp
- cppdefs.h: Definition of the OA_COUPLING and OW_COUPLING cpp-keys, and of the other related and required cpp-keys, such as MPI
- set_global_definitions.h: Definition of cpp-keys in case of coupling (undef OPENMP, define MPI, define MPI_COMM_WORLD ocean_grid_comm: the generic MPI communicator MPI_COMM_WORLD is redefined as the local MPI communicator ocean_grid_comm, undef BULK_FLUX: no bulk OA parametrization)
- mpi_roms.h: Newly added to define variables related to OASIS3-MCT operations. It manages the MPI communicator, using either the generic MPI_COMM_WORLD or the local MPI communicator created by OASIS3-MCT
- read_inp.F: Atmospheric forcing files (croco_frc.nc and/or croco_blk.nc) are not read in OA coupled mode

The calls in CROCO are sketched here, where # name.F marks the routine being entered:

# main.F

if !defined AGRIF

call cpl_prism_init

else

call Agrif_MPI_Init

endif

...

call read_inp

...

call get_initial

# get_initial.F

...

call cpl_prism_define

# cpl_prism_define.F

call prism_def_partition_proto

call cpl_prism_grid

call prism_def_var_proto

call prism_enddef_proto

oasis_time=0

# main.F

...

DO 1:NT

call step

# step.F

if ( (iif==-1).and.(oasis_time>=0).and.(nbstep3d<ntimes) ) then

call cpl_prism_get(oasis_time)

# cpl_prism_get.F

call cpl_prism_getvar

endif

call prestep3d

call get_vbc

...

call step2d

...

call step3d_uv

call step3d_t

iif = -1

nbstep3d = nbstep3d + 1

if (iif==-1) then

if (oasis_time>=0.and.(nbstep3d<ntimes)) then

call cpl_prism_put (oasis_time)

oasis_time = oasis_time + dt

endif

endif

# main.F

END DO

...

call prism_terminate_proto

...
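To make the exchange order in step.F easier to follow, the get/put tests of the schematic above can be replayed in a few lines of Python. This is only an illustrative sketch, not CROCO code: it assumes that nbstep3d starts at 0, that the main loop runs ntimes iterations, and that the iif==-1 test is satisfied whenever a reception is attempted. The name coupling_schedule is hypothetical.

```python
def coupling_schedule(ntimes, dt):
    """Replay the get/put tests of the step.F schematic and log the calls.

    Returns a list of ("get" | "put", oasis_time) tuples in call order.
    """
    oasis_time = 0   # set to 0 at the end of cpl_prism_define
    nbstep3d = 0     # assumption: the 3D step counter starts at zero
    events = []

    for _ in range(ntimes):  # "DO 1:NT", assuming NT equals ntimes
        # Start of step: reception from the coupler (iif==-1 assumed true)
        if oasis_time >= 0 and nbstep3d < ntimes:
            events.append(("get", oasis_time))

        # ... prestep3d, get_vbc, step2d, step3d_uv, step3d_t ...
        nbstep3d += 1

        # End of step: sending to the coupler, then advance the coupling clock
        if oasis_time >= 0 and nbstep3d < ntimes:
            events.append(("put", oasis_time))
            oasis_time += dt

    return events


if __name__ == "__main__":
    for name, time in coupling_schedule(ntimes=3, dt=10):
        print(name, time)
```

With ntimes=3 and dt=10, the sketch logs receptions at 0, 10 and 20 s but sends only at 0 and 10 s: on the last step the counter has reached ntimes, so nothing is sent and oasis_time is not advanced.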

13.2.2. In WW3

The following routines have been specifically built for coupling with OASIS:

- w3oacpmd.ftn: main coupling module with calls to OASIS intrinsic functions
- w3agcmmd.ftn: module for coupling with an atmospheric model
- w3ogcmmd.ftn: module for coupling with an ocean model

The following routines have been modified for coupling with OASIS:

- w3fldsmd.ftn: routine that manages input fields, and therefore the fields received from the coupler
- w3wdatmd.ftn: routine that manages the data structure of the wave model, and therefore the coupling time
- w3wavemd.ftn: actual wave model, where the coupled variables are sent
- ww3_shel.ftn: main routine managing the wave model, where the definition, initialization and partition phases are located

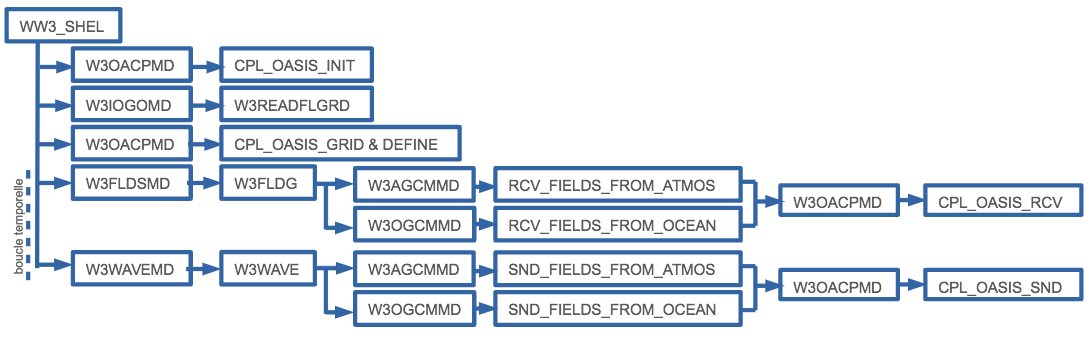

A schematic picture of the calls in WW3 is given here:

13.2.3. In WRF

The routines specifically built for coupling are:

- module_cpl_oasis3.F
- module_cpl.F

Implementation of coupling with the ocean implies modifications in the following routines:

- phys/module_bl_mynn.F
- phys/module_bl_ysu.F
- phys/module_pbl_driver.F
- phys/module_surface_driver.F
- phys/module_sf_sfclay.F
- phys/module_sf_sfclayrev.F

Implementation of coupling with waves implies modifications in the following routines:

- Registry/Registry.EM_COMMON: CHA_COEF added
- dyn_em/module_first_rk_step_part1.F: CHA_COEF=grid%cha_coef declaration added
- frame/module_cpl.F: rcv CHA_COEF added
- phys/module_sf_sfclay.F and ..._sfclayrev.F: introduction of the wave-coupled case, isftcflx=5, as follows:

      ! SJ: change charnock coefficient as a function of waves, and hence roughness
      ! length
      IF ( ISFTCFLX.EQ.5 ) THEN
        ZNT(I)=CHA_COEF(I)*UST(I)*UST(I)/G+0.11*1.5E-5/UST(I)
      ENDIF

- phys/module_surface_driver.F: CHA_COEF added in calls to sfclay and sfclayrev, and “CALL cpl_rcv” for CHA_COEF
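The isftcflx=5 line above computes the roughness length as the sum of a Charnock term, scaled by the wave-dependent coefficient received from the coupler, and a smooth-flow viscous term. The Python sketch below restates that single line for one grid point so the two contributions can be inspected separately. It is not WRF code: the value of G is an assumption (WRF takes G from its own constants), and roughness_length is a hypothetical name.

```python
import math

G = 9.81         # gravity (m s-2); assumed value for this sketch
NU_AIR = 1.5e-5  # the 1.5E-5 factor of the Fortran line (kinematic viscosity of air, m2 s-1)


def roughness_length(cha_coef, ust):
    """Roughness length ZNT (m) for a Charnock coefficient and friction velocity UST (m s-1)."""
    charnock_term = cha_coef * ust * ust / G  # wave-dependent part
    viscous_term = 0.11 * NU_AIR / ust        # smooth-flow part
    return charnock_term + viscous_term


if __name__ == "__main__":
    # Illustrative inputs only: a Charnock coefficient of 0.0185 and ust = 0.3 m/s
    print(roughness_length(0.0185, 0.3))
```

For a fixed friction velocity the result grows linearly with cha_coef, which is how the wave state received through CHA_COEF modifies the surface roughness seen by the surface-layer scheme.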

Schematic picture of WRF architecture and calls to the coupling dependencies:

# main/wrf.F

CALL wrf_init

# main/module_wrf_top.F

CALL wrf_dm_initialize

# frame/module_dm.F

CALL cpl_init( mpi_comm_here )

CALL cpl_abort( 'wrf_abort', 'look for abort message in rsl* files' )

CALL cpl_defdomain( head_grid )

# main/wrf.F

CALL wrf_run

# main/module_wrf_top.F

CALL integrate ( head_grid )

# frame/module_integrate.F

CALL cpl_defdomain( new_nest )

CALL solve_interface ( grid_ptr )

# share/solve_interface.F

CALL solve_em ( grid , config_flags ... )

# dyn_em/solve_em.F

curr_secs2 # time for the coupler

CALL cpl_store_input( grid, config_flags )

CALL cpl_settime( curr_secs2 )

CALL first_rk_step_part1

# dyn_em/module_first_rk_step_part1.F

CALL surface_driver( ... )

# phys/module_surface_driver.F

CALL cpl_rcv( id, ... )

u_phytmp(i,kts,j)=u_phytmp(i,kts,j)-uoce(i,j)

v_phytmp(i,kts,j)=v_phytmp(i,kts,j)-voce(i,j)

CALL SFCLAY( ... cha_coef ...)

# phys/module_sf_sfclay.F

CALL SFCLAY1D

IF ( ISFTCFLX.EQ.5 ) THEN

ZNT(I)=CHA_COEF(I)*UST(I)*UST(I)/G+0.11*1.5E-5/UST(I)

ENDIF

CALL SFCLAYREV( ... cha_coef ...)

# phys/module_sf_sfclayrev.F

CALL SFCLAYREV1D

IF ( ISFTCFLX.EQ.5 ) THEN

ZNT(I)=CHA_COEF(I)*UST(I)*UST(I)/G+0.11*1.5E-5/UST(I)

ENDIF

# dyn_em/module_first_rk_step_part1.F

CALL pbl_driver( ... )

# phys/module_pbl_driver.F

CALL ysu( ... uoce,voce, ... )

# phys/module_bl_ysu.F

call ysu2d ( ... uox,vox, ...)

wspd1(i) = sqrt( (ux(i,1)-uox(i))*(ux(i,1)-uox(i)) + (vx(i,1)-vox(i))*(vx(i,1)-vox(i)) )+1.e-9

f1(i,1) = ux(i,1)+uox(i)*ust(i)**2*g/del(i,1)*dt2/wspd1(i)

f2(i,1) = vx(i,1)+vox(i)*ust(i)**2*g/del(i,1)*dt2/wspd1(i)

CALL mynn_bl_driver( ... uoce,voce, ... )

# phys/module_bl_mynn.F

d(1)=u(k)+dtz(k)*uoce*ust**2/wspd

d(1)=v(k)+dtz(k)*voce*ust**2/wspd

# dyn_em/solve_em.F

CALL first_rk_step_part2

# frame/module_integrate.F

CALL cpl_snd( grid_ptr )

# Check where this routine is called...

# frame/module_io_quilt.F # for IO server (used with namelist variable: nio_tasks_per_group)

CALL cpl_set_dm_communicator( mpi_comm_local )

CALL cpl_finalize()

# main/wrf.F

CALL wrf_finalize

# main/module_wrf_top.F

CALL cpl_finalize()
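In the ocean-coupled case, the surface driver and the YSU scheme shown in the schematic work with the wind relative to the ocean surface current (uoce, voce) rather than the absolute wind. The Python sketch below reproduces the wspd1 expression from the ysu2d lines for a single point, as an illustration only. The function name relative_wind_speed is hypothetical, and the scalar arguments stand in for the Fortran arrays.

```python
import math


def relative_wind_speed(ux, vx, uox, vox):
    """Speed of the lowest-level wind relative to the surface current (m s-1).

    ux, vx   : wind components at the first model level
    uox, vox : ocean surface current components received from the coupler
    The 1.e-9 offset mirrors the Fortran line and keeps the result strictly positive.
    """
    du = ux - uox
    dv = vx - vox
    return math.sqrt(du * du + dv * dv) + 1.0e-9


if __name__ == "__main__":
    # Illustrative values: a 10 m/s wind over a 1 m/s current in the same direction
    print(relative_wind_speed(10.0, 0.0, 1.0, 0.0))
```

A current flowing with the wind reduces the relative speed, and a current opposing it increases the relative speed. The small offset avoids a division by zero where wspd1 appears in a denominator, as in the f1 and f2 lines.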